Redundant in my 20s? Speculation from a young insights professional at the dawn of the AI epoch

As one researcher portentously said to me recently – “What if I don’t want to be a prompt engineer?”

This week, OpenAI introduced Deep Research. ‘What’s new?’, you ask. ‘ChatGPT has been around for over two years now, and we’ve all been using it for research tasks here and there.’ What’s new is its ability to synthesize data using reasoning – showcased by its clarity-seeking interrogation when you submit a request – and a superior understanding of how to exclude, arrange and highlight data in actually quite useful ways. It also reports an impressive new high score for accuracy, beating GPT-4o and competitor models on Humanity’s Last Exam (a benchmark of expert-level questions). Though it scores only 26.6% on the exam, this must be contextualised: who amongst us could correctly answer roughly 800 of its 3,000 PhD-level questions (spanning humanities, science, mathematics, and more)? Not I.

This debut comes straight off the back of China’s DeepSeek launch, which sent shockwaves around the global economy (hold on to your hats, NVIDIA investors). The provision of a free (disregarding the hidden costs of surveillance capital for now), high-quality open-source AI model changes the game for everyday consumers and researchers alike. Its claims of unparalleled cost and energy efficiency further scythe through the logistical barriers standing in the way of wider AI adoption.

Returning to the point: what’s new? What’s new is that we’ve crossed a key milestone in the unfurling of the AI epoch, with resonant implications for research careers. Open-source models and high-quality chat-style UIs mean that AI’s impact on how we do research is about to become exponential. Labour that can be accomplished by LLMs will shortly be accomplished by LLMs. And this is before we even begin to tackle the prospect of AGI (Artificial General Intelligence). Controversial as the claim may be, and with wildly varying forecasts of what it will be able to do and by when, AGI will be developed in the not-too-distant future. And when it is, the nature, structure, and value of human labour will be unequivocally changed. In the meantime, we can stay grounded in what’s in front of us: unprecedented AI research tools, with more on the way.

The reality is that these developments threaten all of us in insights and research – but no-one more than our junior researchers cutting their industry teeth on data collection, collation, and analysis. This is because the kinds of functionality Deep Research and its ilk offer are similar to those we currently ask of research interns, assistants and associates: hunting down information online, synthesizing across sources, comparing and contrasting data points, summarising major takeaways, taking notes in meetings and interviews, pulling consumer quotes from transcripts, translating data between languages, and finding images to illustrate a point. Speaking as an associate myself, I have already used AI assistance for most of these tasks. It is a strange thing to be complicit in one’s own potential redundancy.

Discourse around AI innovation for research often leverages the idea of ‘freeing you up from time-consuming tasks’ so that you can ‘focus on the real work’. But what if your job is largely these tasks? What if you’re a junior still laying necessary foundations so you can one day get to the ‘real work’?

Our industry stands at the precipice of a crucial deliberation: how can we nurture fresh talent during this era of unprecedented, exponential change? What should we ask of junior researchers, and how should we train them, when many of the technical skills previously required are about to go out the window? As agencies hustle to develop proprietary in-house AI tooling (thus sidestepping the data-confidentiality fears that previously held them back), it is increasingly urgent to develop an industry POV on this matter.

As we roll out AI into the core of our work, we should simultaneously roll out a development precedent and pipeline for our industry’s youth. It should take into account that juniors have the most to lose: namely, opportunities to develop valuable people-management skills that are (for now) less threatened by AI replacement. However, they also have the most to gain – by staying sharp at the forefront of AI-enabled research, juniors could become experts in a new era of research.

To help our juniors become industry experts, rather than redundant, I propose the following:

Transparent dialogue

A Gen Z fave – and for good reason. Without fear-mongering, it’s time we addressed the implications before the consequences befall us. We do our juniors a disservice if we don’t address how AI developments bear specifically on their growth. We must therefore begin managing expectations: traditional industry development pathways are time-limited, and ‘emergent’, ‘new’ and ‘cutting-edge’ are the new normal. This will necessitate a radical gear shift away from juniors doing the typical legwork of writing screeners and the like – AI will soon manage the bulk of this, and we must set expectations accordingly. To indulge a metaphor: ‘The ship has changed course; make sure you’re ready to dock at a different port.’

Redefining value

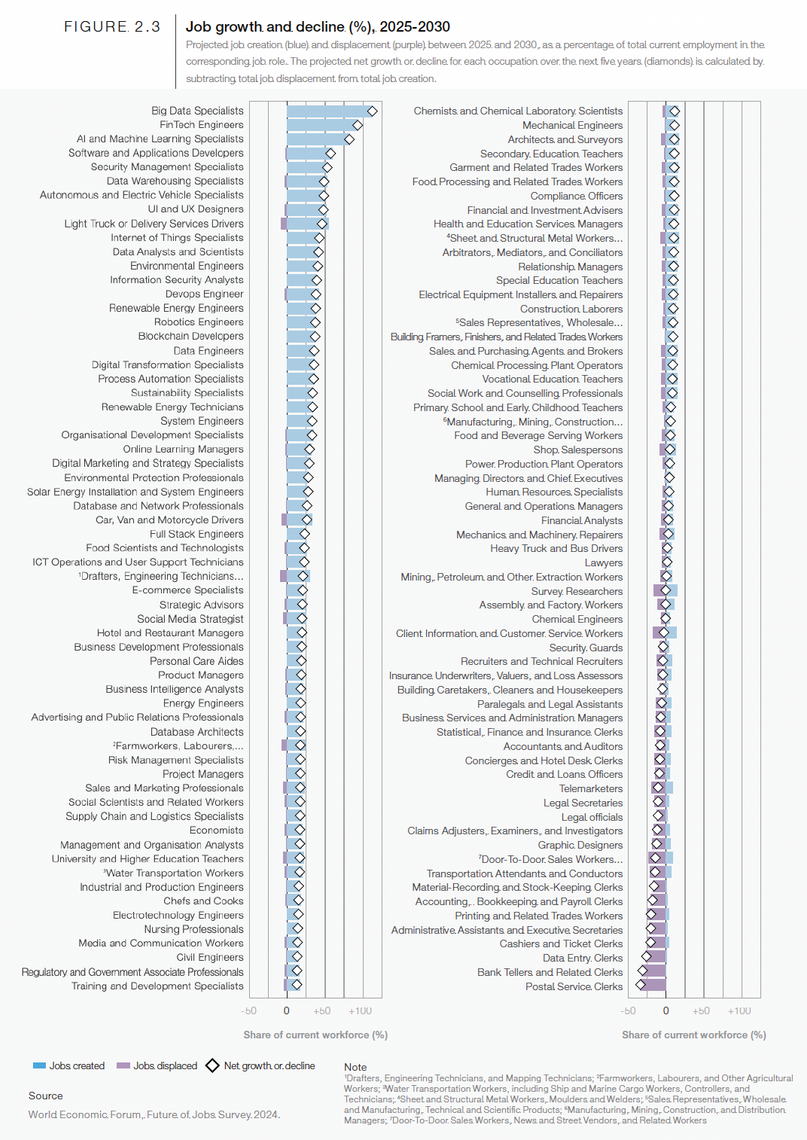

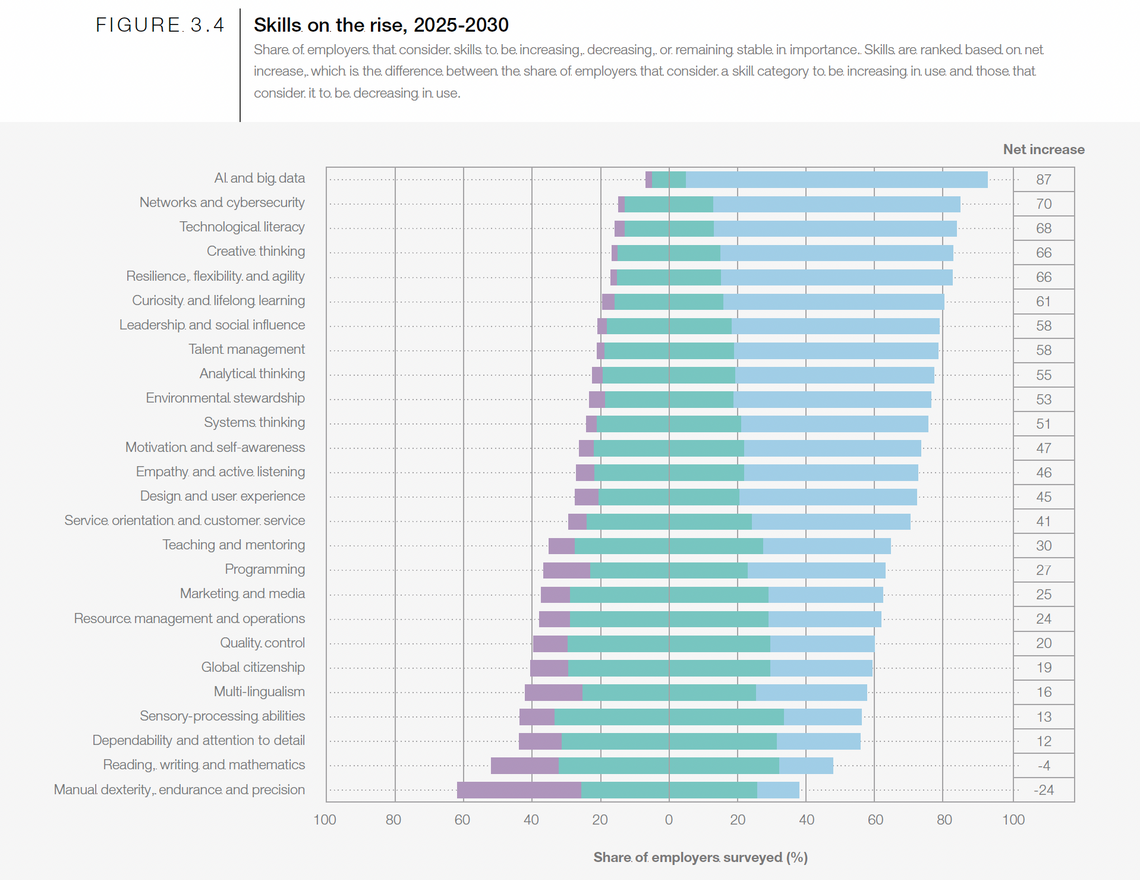

We must get clear on what will become valuable in junior research roles instead, and make a plan for helping juniors get there. A starting point would be to review the World Economic Forum’s freshly released Future of Jobs Report 2025:

Survey researchers are at risk, but strategic advisors stand to gain. We should skew development pathways accordingly.

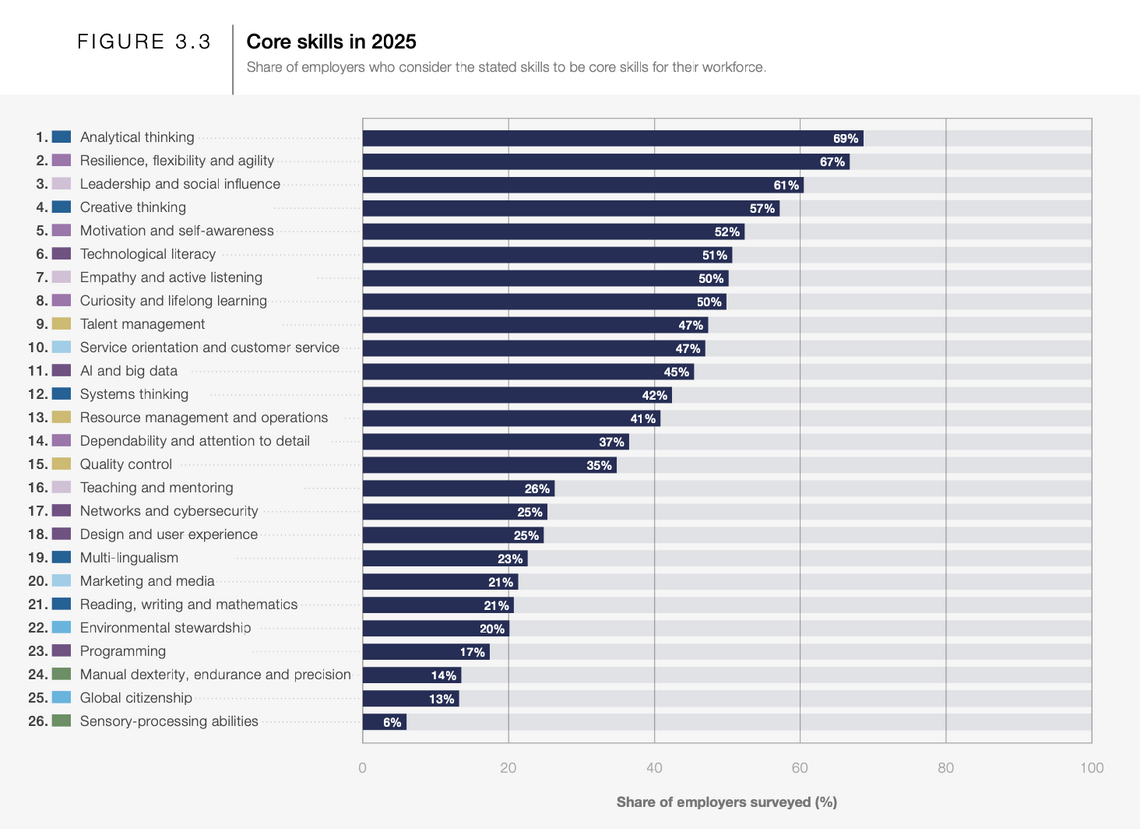

Analytical thinking tops the list of desirable skills in 2025 – how can we maximise and leverage this as an extant core skill in our industry?

Skills tipped to rise in demand over the next five years include resilience, flexibility and creativity – despite the growing pains, this bodes well for junior researchers thrust into change.

So, this is our best estimate of where we’re going – but how do we help young researchers get there? How do we proactively take them from the skill sets of today to those of tomorrow? Perhaps most confoundingly:

How do we train junior researchers to recognise ‘good’ in our industry’s work, if they’ve never done (and likely will never do) the work directly themselves?

To bring this to life, think back to how you learnt what a good interview screener looks and sounds like. Most likely, it was a skill honed over time through trial and error: drafting screeners and then receiving feedback on them from more senior colleagues. The sun is about to set on this kind of training experience, as AI will largely remove the need for juniors to write drafts. But then how will they tell a good AI-generated screener from a bad one, and know which one to move forward with?

This remains an open question, and one we don’t yet know the answer to. In part, the answer could involve increased shadowing of seniors, so that juniors can learn vicariously what good looks like. There is no clear precedent, so this is territory the industry must be open to exploring creatively.

As we feel out this mystery, valuable skills to hone in our juniors will include:

Managing AI tools: Juniors will need more IT and coding skills than is currently typical. Being able to navigate data warehouses, define AI agent tasks and identify the most appropriate model for the job will become the ‘legwork’ of tomorrow’s research.

Critical thinking: In a context where AI communicates with misleading overconfidence, juniors must have the ability (and conviction) to scrutinize its output and make sense of it. Close reading, logical reasoning and the ability to infer implications will all be needed.

Swift and accurate fact-checking: Juniors will be the first line of defence against seemingly trustworthy AI hallucinations. Fact-checking AI output will require a bevy of creative and time-efficient solutions, which could include spot-testing data points; old-fashioned reading of key resources; interrogating the AI about its output and searching for inconsistencies in its answers; cross-verifying outputs with relevant experts, groups with lived experience or other trustworthy human sources; and running the same research task across multiple models and cross-checking the outputs.

Acting as the AI-human bridge: As the team members likely closest to AI’s data processing, juniors have a stewardship job-to-be-done (JTBD) in ensuring that the most valuable insights emerging from AI are carried through a research project. This is particularly key because it gives juniors opportunities to develop communication and leadership skills.

Supporting self-direction

The above outlines what our industry should begin cultivating – but junior research professionals must take matters into their own hands too. In a climate of relentless ambiguity, the future may very well be what our youngest make of it. To make the most of this, junior researchers should:

Actively engage with AI research tools

Pay attention to changes in the AI weather and forecast key trends

Closely collaborate with more experienced mentors for support in developing skills for the future (not just skills for today).

Prepare for the transition into a more tech-enabled role, and begin learning basic SQL, Python, etc. To be clear, I do not believe we will all need to become coders. However, a basic ability to communicate with AI will become a hygiene factor.

Double down on human capabilities in data access that AI struggles with. This includes research that is necessarily embodied (for now), such as in-field ethnography: quite apart from the prohibitive hardware investment required to roll out robot field assistants anytime soon, it remains questionable whether they could ever yield the same quality of data as human-to-human, face-to-face inquiry. Similarly, some kinds of research require building human relationships just to get started – for example, accessing elusive groups like the ultra-wealthy. With little public data available, these groups are difficult to study and require ‘knowing the right people’ to get a foot in the door. In fostering these close relationships, young researchers certainly have the upper hand (or rather, foot?) over AI.

Frame and develop soft skills and creativity as key ways to complement, rather than compete with, AI. Debate rages around the future value of human creativity and how it differs from the production of AI-generated creative assets. We may eventually be unable to compete on pace or even quality of creative production, but focussing on the journey rather than the destination remains an important lens. When we relinquish interest in fostering creativity, what else do we lose? I’d rather not find out.

Finally, envision the future you want in an AI-enabled research career. What bold steps can you take to move towards it?

I asked some industry peers to creatively consider what awaits us in the AI future.

Marie-Claire Springham responded by making this gorgeous custom-made GIF:

And Felicity Clark responded with the thoughtful Sense of Healing: AI Data Sculpture by Refik Anadol:

“I feel this artwork literally visualises the collaboration of humanity and AI to create; a partnership where each party’s potential is enhanced. Refik feeds the AI human-generated neurological data to create a fluid piece of artwork, showcasing the very dynamic between the roles of human and AI in the research practices of the future; one where human orchestration, direction, idea generation and real-world contextual application work alongside the generative and interpretative powers of AI.”

As for my own projections, I will defer to the words of David Bowie:

There's a starman waiting in the sky

He'd like to come and meet us

But he thinks he'd blow our minds

There's a starman waiting in the sky

He's told us not to blow it

'Cause he knows it's all worthwhile

He told me

Let the children lose it

Let the children use it

Let all the children boogie

When I asked ChatGPT the same question, it offered up this:

To conclude, the revolution will be televised, but junior researchers (and with them, the future of our entire industry) will be much safer if we take part rather than watch.