Initial success, challenges, and applications

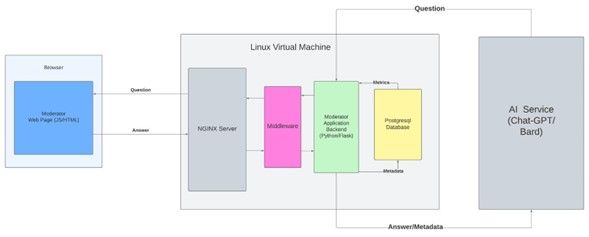

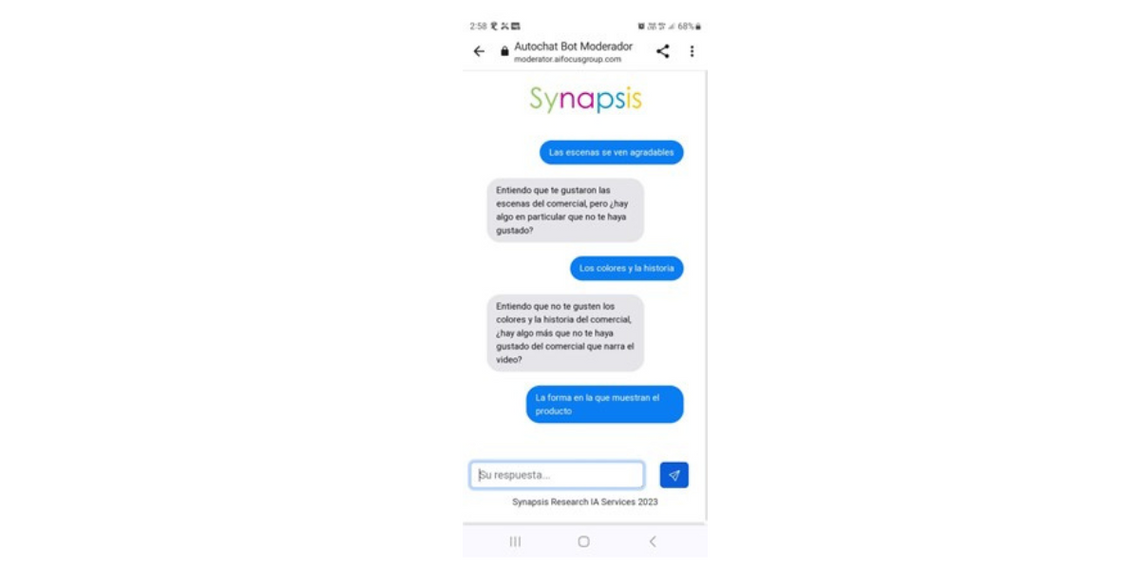

Initial testing revealed the tool's efficacy in handling unstructured data, crafting discussion guides, moderating in-depth interviews, converting audio to text, and analyzing textual content. Starting with interview moderation, the platform evolved into a dynamic chat that can engage in personalized conversations based on predefined questions and can even accommodate multimedia elements for evaluation purposes.

The more we used Automoderator, the more challenges surfaced, which in turn reshaped our expectations. Clients, enticed by the term “artificial intelligence,” envisioned a system capable of fluent conversation with human-like behaviors. Explaining the nuanced nature of the system (more intelligent than a chatbot but not a substitute for a psychologist) became an early source of frustration.

We conducted two pilot studies using Automoderator with clients experiencing real challenges:

One was with a beverage company that wanted to evaluate two animatics for an ad campaign. Animatics of this kind were usually tested with quantitative methodologies, and the company wanted to understand more deeply the reasons behind answers to open-ended questions. The study involved 60 surveys among randomly selected participants from our client's own panel (average interview length: 10 minutes). Additionally, our client conducted simultaneous online quantitative sampling with 200 cases.

The other study was for a community services company that wanted to get to know the young adolescent users of its services at a qualitative level. It involved 36 one-hour interviews with participants recruited by referral.

Technical limitations arose throughout the stages of these projects, which we describe below.

Subjectivity in Question Depth: How deeply the system probes depends on participant responses, which poses a challenge because it is impractical to set parameters for every term a participant might use.

Conditional Jumps Between Questions: Programming conditional jumps or branching logic proved unattainable. The system strictly adheres to a predetermined sequence of questions stored in an auxiliary file, and customizing every possible pathway would have been too extensive a task.

Avoidance of Confrontation: AI systems record participant changes of opinion without delving into the underlying reasons. While this approach ensures neutrality, it falls short in exploring the motivations behind shifts in perspective.

Misinformation Topics: Engaging in conversations about topics prone to misinformation, such as climate change or vaccines, poses challenges for AI in providing accurate information. This limitation, though less relevant in typical market research discussions, becomes critical in health-related studies, where misinformation can compromise the integrity of the study.

Branding Confusion: Even after specifying the brand, the AI can be confused by statements using the brand word in another sense, potentially misinterpreting the context of the conversation.

User Experience: Users interact with the platform through various devices, and interruptions in the connection between users and the AI present challenges. Scenarios such as internet signal disruptions or AI overload must be anticipated and addressed to ensure a seamless user experience. Additionally, managing user expectations regarding the AI's capabilities, for example when users ask about ending the conversation, is crucial.
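To make the "Conditional Jumps Between Questions" limitation more concrete, the following is a minimal sketch, not Automoderator's actual implementation. It contrasts a fixed question sequence with what a branching guide would have to spell out. The data layout, field names, question texts, and both helper functions are hypothetical; the source only states that questions are stored in an auxiliary file in a predetermined order.

```python
from typing import Optional

# Hypothetical fixed-order guide (field names and questions are assumptions).
LINEAR_GUIDE = [
    {"id": "q1", "text": "Have you seen this ad before?"},
    {"id": "q2", "text": "What did you like about it?"},
    {"id": "q3", "text": "What would you change about it?"},
]


def next_linear(guide: list, current_index: int) -> Optional[dict]:
    """Return the next question in fixed order, regardless of the answer."""
    nxt = current_index + 1
    return guide[nxt] if nxt < len(guide) else None


# Hypothetical branching guide: every pathway must be listed per question.
# "*" is an assumed catch-all route for answers with no explicit match.
BRANCHING_GUIDE = {
    "q1": {"text": "Have you seen this ad before?",
           "next": {"yes": "q2", "no": "q3"}},
    "q2": {"text": "What did you like about it?",
           "next": {"*": "q3"}},
    "q3": {"text": "What would you change about it?",
           "next": {}},
}


def next_branching(guide: dict, current_id: str, answer: str) -> Optional[str]:
    """Return the id of the next question for this answer, or None if no route exists."""
    routes = guide[current_id]["next"]
    return routes.get(answer.strip().lower(), routes.get("*"))


if __name__ == "__main__":
    print(next_linear(LINEAR_GUIDE, 0)["id"])
    print(next_branching(BRANCHING_GUIDE, "q1", "No"))
    print(next_branching(BRANCHING_GUIDE, "q1", "maybe, I am not sure"))
```

In this sketch a free-text answer such as "maybe, I am not sure" matches no route for `q1`, which illustrates why listing a pathway for every possible response quickly becomes too extensive.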

Managing Complexity and Enhancing AI Integration:

Ongoing development and refinement are crucial when navigating these kinds of technical limitations. Tackling misinformation challenges requires continuous updates to the knowledge base, ensuring the AI remains well-informed on evolving topics. Addressing branding confusion involves refining the system's contextual understanding, distinguishing between brand-related discussions and general language use.

Moreover, optimizing user experience necessitates proactive measures, including robust error handling for connectivity issues and clear communication to users about the AI's capabilities and limitations. As technology evolves, the integration of user feedback becomes invaluable, guiding developers in enhancing the platform's responsiveness and adaptability.
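As one illustration of what error handling for connectivity issues might look like, here is a minimal sketch under stated assumptions rather than a description of the platform's code. It retries a failed request with exponential backoff and falls back to a clear message for the participant. The `ModelUnavailable` exception, the `send` callable, the retry settings, and the fallback wording are all hypothetical.

```python
import time
from typing import Callable


class ModelUnavailable(Exception):
    """Hypothetical error for a dropped connection or an overloaded AI backend."""


# Assumed wording; a real study would adapt this message to its participants.
FALLBACK = "We are having trouble connecting. Please stay on this page while we try again."


def ask_with_retry(
    send: Callable[[str], str],
    message: str,
    attempts: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call send(message), retrying with exponential backoff on failure.

    Returns the reply, or FALLBACK once all attempts have failed.
    """
    for attempt in range(attempts):
        try:
            return send(message)
        except ModelUnavailable:
            # Wait 0.5 s, 1 s, 2 s, ... between attempts, but not after the last one.
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)
    return FALLBACK
```

Passing `sleep` as a parameter is a design choice that keeps the sketch testable without real waiting; showing the fallback text instead of leaving the participant with a silent failure is one way to communicate the AI's limits clearly.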