A new hybrid methodology: how AI-moderated interviews are redefining research

Surveys are falling short. A new method, AI-moderated interviews (AIMIs), is proving that the future of research is voice, not checkboxes.

In short:

AI-moderated interviews (AIMIs) significantly outperform traditional surveys in qualitative metrics like depth, relevance, and engagement.

AIMIs use voice interaction and contextual follow-ups to simulate a real conversation, resulting in fewer gibberish responses and richer data.

Researchers keep full control over design and analysis while benefiting from adaptive, scalable interviews.

Traditional surveys are showing serious strain: static logic, low-quality answers, and rising fatigue.

AIMIs represent the next leap forward in research: more human, more scalable, more insightful.

Static tools for a dynamic world

Surveys were the best we had until now. Built for scale and simplicity, they’ve long served as the industry’s default tool. But their limits have become impossible to ignore.

Survey fatigue is growing. Drop-out rates are high. Fraud is everywhere. Worse still, qualitative questions are often met with one-word responses or worse: copy-paste filler, nonsense, or evasive "I don't know" replies. The format isn't failing because researchers lack skill; it's failing because the medium is static in a world that demands interaction.

It's not only about attention spans; it's about the mismatch between complex human experiences and checkbox logic. We've optimized the question; now it's time to optimize how we ask it.

A new hybrid methodology: what makes AIMIs different

AI-moderated interviews (AIMIs) are voice-enabled, adaptive conversations that simulate the probing style of a skilled qualitative interviewer. They don’t just read questions: they ask them, and then they listen.

What sets AIMIs apart isn't just the use of voice; it's the ability to ask dynamic follow-up questions in real time. It's contextual conversation, powered by large language models and designed for human-like nuance.

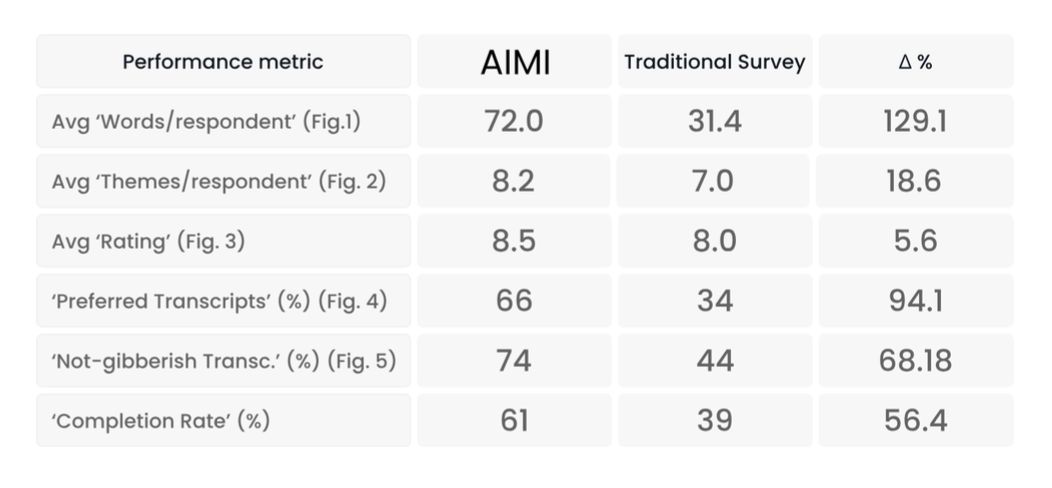

In a comparative study of 200 participants split into two balanced groups (100 AIMI, 100 survey), the AIMI used exactly the same questions as its static survey counterpart. The only difference? Format and intelligence.

AIMIs outperform surveys

This study investigates the efficacy of an AI-moderated interviewer in eliciting richer and more insightful qualitative data compared to traditional web-based static surveys. A comparative experiment was conducted with two balanced groups of 100 participants each. One group completed a traditional static survey hosted by a widely used platform, while the other experienced an AI-moderated interview (AIMI) incorporating voice interaction and dynamic follow-up questions.

The analysis, leveraging a large language model for thematic and quality assessment, revealed that AIMIs:

Yield significantly higher word counts

Extract a greater number of themes

Are preferred for transcript quality in 66% of direct comparisons

Achieve higher completion rates

Generate fewer gibberish responses

These outcomes were measured using uniform prompts and evaluation methods across both formats to ensure comparability. The use of voice interaction and contextual follow-ups in AIMIs emerged as the key differentiators responsible for these performance improvements.

Here's a summary of the core metrics:

Let’s unpack what this means:

More words = richer narratives and more insight to analyze.

More themes = better segmentation, depth, and discovery.

Fewer gibberish answers = cleaner data, less fraud, and higher ROI.

Higher completion rates = better participant engagement, even with a new format.
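Three of these four metrics are simple to compute once sessions are collected; theme extraction needs a language model and is left out. The sketch below is a minimal illustration, not the study's pipeline: the `Session` structure and the `looks_like_gibberish` rule are hypothetical, and the study itself used a large language model, not a character-count heuristic, to judge response quality.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """One participant's session (hypothetical structure)."""
    answers: list[str]  # free-text or transcribed answers, one per question reached
    completed: bool     # True if the participant reached the end


def looks_like_gibberish(answer: str) -> bool:
    """Crude stand-in for an LLM quality judgment (assumption, not the study's method)."""
    text = answer.strip()
    if not text:
        return True
    # Flag answers made up mostly of non-letter characters, e.g. "!!!???" or "1234".
    letters = sum(ch.isalpha() for ch in text)
    return letters / len(text) < 0.5


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Per-format summary: mean words per answer, completion rate, gibberish rate."""
    answers = [a for s in sessions for a in s.answers]
    if not sessions or not answers:
        raise ValueError("need at least one session with at least one answer")
    return {
        "mean_words_per_answer": float(mean(len(a.split()) for a in answers)),
        "completion_rate": sum(s.completed for s in sessions) / len(sessions),
        "gibberish_rate": sum(looks_like_gibberish(a) for a in answers) / len(answers),
    }
```

Running `summarize` once on the AIMI sessions and once on the survey sessions gives two directly comparable rows, as long as both formats are scored with the same rule.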

Transcript quality was assessed using LLM-driven scoring based on:

Contextual relevance

Depth of reasoning

Presence of serial cheating or evasion

AIMIs outperformed static surveys across all three dimensions; in 66% of direct transcript comparisons, AIMIs were judged superior.
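A figure like "66% of direct comparisons" is a pairwise win rate. Here is a minimal sketch, assuming each comparison pairs one AIMI transcript with one survey transcript and a judge (an LLM in the study; any callable here) returns "A", "B", or "tie". The function name `aimi_win_rate`, the verdict labels, and the per-pair order shuffling are illustrative assumptions, not details taken from the paper.

```python
import random
from typing import Callable

# A judge sees two transcripts labeled "A" and "B" and returns "A", "B", or "tie".
# In the study an LLM played this role; here it is any callable (assumption).
Judge = Callable[[str, str], str]


def aimi_win_rate(pairs: list[tuple[str, str]], judge: Judge, seed: int = 0) -> float:
    """Share of (aimi, survey) pairs in which the judge prefers the AIMI transcript.

    Ties count as non-wins. Presentation order is shuffled per pair so that a
    judge's preference for a fixed position is not systematically tied to one format.
    """
    if not pairs:
        raise ValueError("no comparisons to score")
    rng = random.Random(seed)
    wins = 0
    for aimi_text, survey_text in pairs:
        aimi_first = rng.random() < 0.5
        a, b = (aimi_text, survey_text) if aimi_first else (survey_text, aimi_text)
        verdict = judge(a, b)
        # The AIMI wins if the judge picked whichever slot it was shown in.
        if verdict == ("A" if aimi_first else "B"):
            wins += 1
    return wins / len(pairs)
```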

Why it works: the science behind the shift

Three key elements drive AIMI performance:

Voice interaction: conversational flow mimics a real interview, not a form. It keeps participants attentive and engaged.

Dynamic follow-ups: AIMIs ask for clarification and probe vague or incomplete answers.

Active fraud prevention: AI-native software can detect, live during the interview, when a respondent is disengaged or giving inconsistent responses.

Every answer is evaluated for adequacy and depth in real time. If it's vague, the AIMI gently probes further; if it's strong, the interview moves on. It's the closest thing to sitting across from a skilled qualitative moderator, at scale.
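The probe-or-move-on behavior can be sketched as a bounded loop. This is a hypothetical illustration: `ask`, `is_adequate`, and `make_probe` are placeholders for the voice interface and the LLM calls a real AIMI platform would use, and the `max_probes` cap is an assumption added so a participant is never probed indefinitely.

```python
from typing import Callable


def run_question(
    question: str,
    ask: Callable[[str], str],              # delivers a prompt, returns the answer (hypothetical)
    is_adequate: Callable[[str], bool],     # judges adequacy and depth (an LLM in practice)
    make_probe: Callable[[str, str], str],  # writes a follow-up from question + answer
    max_probes: int = 2,                    # assumed cap on follow-ups per question
) -> list[tuple[str, str]]:
    """Ask one question, probing while the answer is vague; return (prompt, answer) turns."""
    turns: list[tuple[str, str]] = []
    prompt = question
    for attempt in range(max_probes + 1):
        answer = ask(prompt)
        turns.append((prompt, answer))
        if is_adequate(answer):
            break  # strong answer: move on to the next question
        if attempt < max_probes:
            prompt = make_probe(question, answer)  # vague answer: probe further
    return turns
```

Keeping the adequacy check separate from the probe writer means either one can be swapped or tested on its own, for example by replacing the LLM judge with a simple word-count rule during development.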

This adaptive logic is what makes AIMIs resilient to gibberish and fraud: respondents can't simply rush through, because they're pulled into the interaction. This naturally filters out low-effort participants and boosts response quality.

What researchers gain (and don’t lose)

This isn’t about automating researchers out of the process. It’s about augmenting their work.

You still design the questions.

You still own the tone and intent.

You still control the analysis.

But now, instead of hard-coding endless logic paths or guessing at every follow-up, you can focus on insight, not infrastructure.

Conclusion notes

We've long treated participants like data-entry clerks; AIMIs flip that script. Now they're contributors to a conversation, active agents in the research process. This shift mirrors the broader future of research: more adaptive, more humane, and more aligned with how people actually think and speak. AI-moderated interviews outperform traditional surveys across depth, quality, and engagement. They reduce fraud, feel more natural, and, most importantly, help us understand people, not just count them.

AIMIs don't replace the researcher; they replace the rigidity. Researchers remain in control, but with smarter tools.

For researchers looking to go deeper without losing control, AIMIs are the next technology worth adopting.

This article is based on findings from a comparative study conducted using Glaut AI-native moderated voice interviews. Full paper available here.

Matteo Cera

CEO & Founder at Glaut

Matteo Cera is the CEO and co-founder of Glaut, an AI-native market research platform for experienced researchers. Since 2023, Glaut has pioneered AIMIs, a hybrid data collection methodology based on AI-moderated voice interviews, in more than 50 languages. Glaut's software allows researchers to program and run large-scale research projects and to analyze qualitative insights at scale. Matteo has over 15 years of experience in tech; he began his career at McKinsey & Company and graduated summa cum laude from Bocconi University.