Balancing Innovation with Integrity: How Global Networks Are Shaping the Future of Insight Through AI

AI is now a vital force in research, improving predictive analytics and coding. Our Global WiNdow blog highlights insights from WIN members on the importance of integrating human judgment with ethical practices.

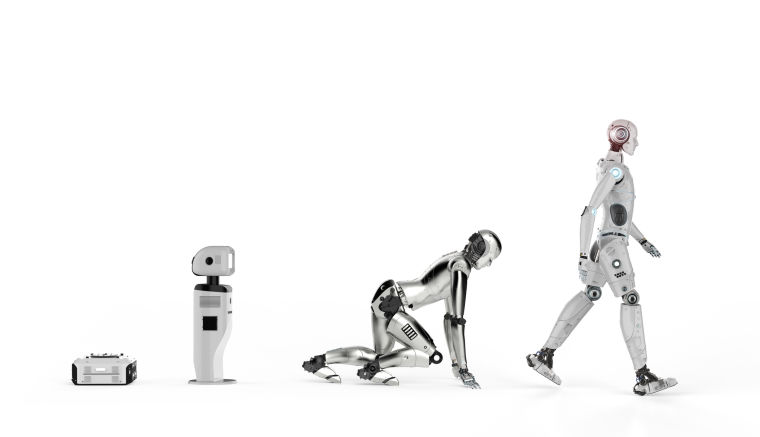

Over the past few years, AI has been introduced to the industry as a distant disruptor, new but with endless potential. Now, we’re seeing it become an ever-present force that is rapidly reshaping research.

From predictive analytics to automated coding, AI is transforming how we gather, interpret, and deliver insight. As researchers, however, we do face a critical challenge: how do we embrace innovation without compromising the integrity that defines our profession, which focuses so much on accuracy and truth?

This question sits at the heart of our latest blog edition of Global WiNdow, where we gathered perspectives from our WIN members across Peru, Greece, Argentina, China, Italy, Spain, Ecuador, Vietnam, Germany, Sweden, Slovenia, and Malaysia. What emerged from this was a clear consensus: the future of insight depends heavily on the thoughtful integration of human judgment and a commitment to upholding ethical frameworks that support transparency and protect cultural values.

Local Frameworks, Global Responsibility

Across the WIN network, we’re seeing a patchwork of regulatory responses to AI, some robust and others still emerging. For example, in Peru, recent updates to the Personal Data Protection Regulation are laying the groundwork for ‘Responsible AI’ use. The regulation mandates transparency when automation is used to make decisions, as well as frequent data and breach reporting. Requirements like these are becoming more common around the world, so it’s no surprise that Italy’s latest draft AI legislation aligns with the broader EU AI Act, released in July of last year. Italy’s local legislation does, however, add national nuances around democratic integrity and cultural values.

On the other side of the world, China’s approach is more centralised, with state-led ethical governance and comprehensive policies like the New Generation AI Ethics Guidelines. Meanwhile, countries like Slovenia and Ecuador are still waiting for structured frameworks, highlighting the uneven pace of regulatory development.

You may ask, ‘Why do all of these local differences matter?’ They matter because they shape how researchers apply AI responsibly on a global basis and highlight the need for worldwide collaboration in order to achieve consistent ethical harmony across borders. We are, after all, one industry, and two million heads are better than one.

Human Context Is Still King

Despite AI’s growing capabilities, WIN members are united in their belief that human interpretation remains essential. As Urpi Torrado from Peru puts it, “Responsible AI use in research requires us to retain a strong degree of human judgment to preserve the authenticity and ‘soul’ of our participants’ voices.” Constanza Cilley from Argentina echoes this: “AI is a powerful assistant, but not a replacement. The central role in making sense of findings still belongs to humans.”

Whether it’s qualitative nuance or strategic consulting, researchers must continue to lean into the aspects that machines can’t replicate, such as context, empathy, and discernment. Maintaining human oversight is essential to ensure insights are interpreted correctly; raw AI data must never be activated without being thoroughly checked through traditional methods. Estefanía Clavero from Spain highlighted why this matters: it maintains value and keeps control of the tone and narrative of our data stories, ensuring they stay within the project’s context.

This combination of automated analysis and expert judgment enables more informed and responsible decision-making. Truth and credibility are a huge part of insight, so to keep delivering this level of value, a middle ground or ‘sweet spot’ must be found. Automation can kick the process off, but ultimately the result must go through several levels of human-led fine-tuning and quality checks.

The Importance of Transparency and Why Building Trust Is Necessary

Client attitudes toward AI vary, as our members have experienced first-hand. In China, Sweden, and Greece, curiosity often outweighs concern. Researchers are proactively sharing how AI enhances analytical rigour, with clients keen to see further use of it. Barry Tse from China reports: “Many are intrigued, or even reassured, when they learn that AI has been deployed to enhance efficiency.” This, of course, could have a lot to do with the way the team has built trust through transparency, clearly and consistently attributing their wins to the use of AI tools.

By contrast, in Malaysia and Vietnam, some clients are more cautious, demanding disclaimers, oversight, and a greater investment in education around data sources and model training. Wai Yu See Toh, from Malaysia, warns that many clients lack understanding of how AI models are trained and what data sources are used. Without this knowledge, it’s difficult to assess the reliability of AI-generated insights. Xavier Depouilly also notes that, in Vietnam, some clients prohibit AI use altogether or request detailed disclosures on its application.

This divergence reveals a deeper issue: many clients need more education to critically assess AI-driven insights. In some parts of the world, AI as an everyday tool is still an early concept, and, as we’ve seen previously, it can take some time to grow comfortable using it. As researchers, we must bridge this gap, not just by using AI responsibly, but by communicating its role clearly both internally and externally.

How WIN, as a Global Group, Is Enhancing AI Usage

On a global scale, we at WIN are embracing AI as a catalyst for smarter, more responsible research rather than as an uncontrollable force storming the industry. To maintain the collaboration between innovation and human insight, we are fostering worldwide usage by sharing ethical best practices and equipping local researchers with the tools to navigate AI confidently. Our aim is to help shape a future where innovation is grounded in trust. As technology evolves, our collective commitment to transparency, education, and cultural nuance ensures that insight remains both human-centric and future-ready, driving automated discovery that is grounded in human integrity.

Richard Colwell

Chair at WIN Network

Richard has spent more than 30 years in the market research industry and is a well-respected member of the community, having secured Insight 250 awards in 2021, 2023 and 2024. He founded RED C Research in Ireland in 2003, and the group has now grown to be the largest independent full-service agency in Ireland, while also having a strong footprint in the UK market. Richard is President of the WIN network, a collaboration of 45 independent research and polling agencies worldwide, a past Chairman of AIMRO (Association of Irish Market Research Organizations), and was the Irish representative for ESOMAR for over 10 years until earlier this month.